AI for Research: Which Models Deliver the Best Facts and Figures?

January 2, 2025

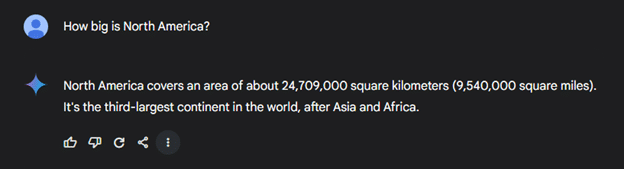

Have you ever typed a specific question into a search engine, only to be met with page after page of unclear answers? This can be frustrating, especially during important research or when the question is time-sensitive. With the rise of AI tools and platforms claiming to boost productivity — often highlighting research assistance — one might wonder: can AI really find specific facts and figures quickly?

To test this notion, I have prepared an exercise in fact finding using three different AI models: ChatGPT, Google Gemini, and Perplexity AI. Each of these AIs is easy to use, requiring nothing more than typing your question or prompt into the chat box. ChatGPT and Perplexity don’t even require an account to access their basic functions, while Google Gemini requires a Google account (which you likely already have).

The time between hitting enter on a prompt and getting an answer from one of the above models is typically less than a second.

The rules of the exercise are simple:

- Each question will constitute a round. I’ll ask each AI the same question and present their answers, along with my own research to corroborate them. The questions will cover a variety of subjects to assess each AI’s fact-finding abilities.

- Each AI will have only one chance to answer a round’s question. There will be no follow-ups or prompt tweaking. This exercise is designed to simulate the experience of a new user under a time crunch.

- Accuracy is the priority. I may award bonus points for exceptional quality, but the main focus is ensuring the models don’t hallucinate—i.e., make up details with no basis in reality or their available resources.

Let’s begin.

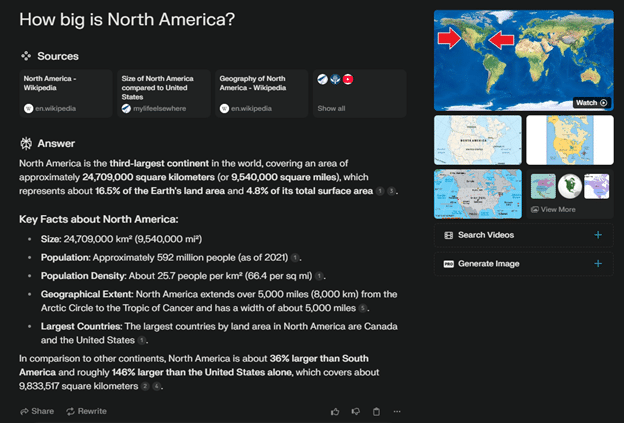

Round 1: How big is North America?

ChatGPT:

Gemini:

Perplexity:

Correct Answer:

It is indeed 9.54 million square miles. All three passed.

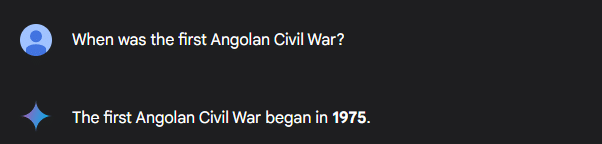

Round 2: When was the first Angolan Civil War?

ChatGPT

Gemini

Perplexity

Correct Answer:

The first Angolan Civil War did indeed begin in 1975. The facts provided by GPT and Perplexity align with the summaries from GlobalSecurity.org. However, I noticed they omitted the third faction involved, the Frente Nacional de Libertação de Angola (FNLA). The FNLA was defeated and forced to retreat from Angola within a few years of the war’s start, which may be why Western sources often gloss over it.

Round 3: How many daily users does Google see?

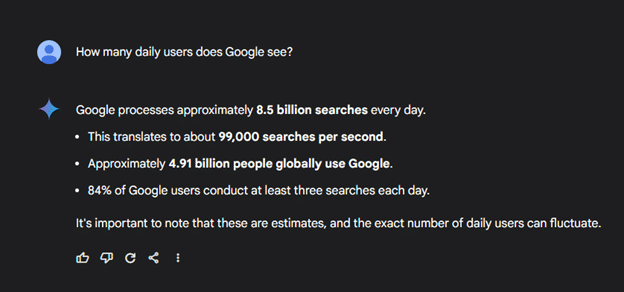

ChatGPT

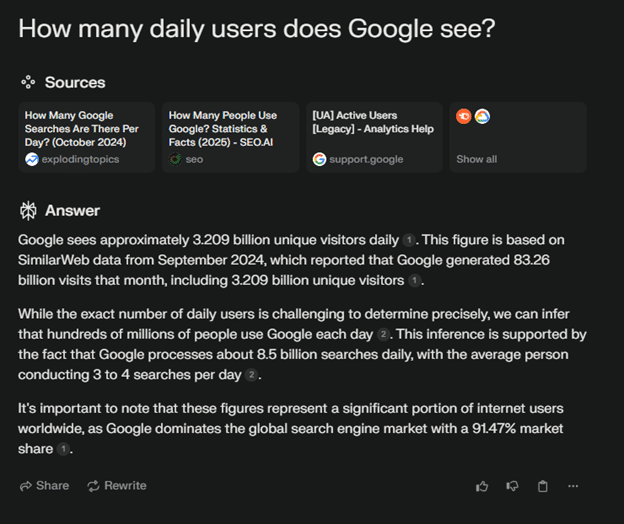

Gemini

Perplexity

Correct Answer:

According to Exploding Topics, which Perplexity explicitly cites as one of its sources, Google has approximately 3.209 billion unique daily users. This figure is somewhat difficult to confirm due to the variance in daily user counts for any service, as well as the AI’s interpretation of the query. This is evident in GPT and Gemini’s responses, which focused on the total user count for Google’s entire service catalog, rather than just Google Search.

Round 4: How many ounces are in a pound?

ChatGPT

Gemini

Perplexity

Correct Answer:

It is indeed 16 ounces to 1 pound. However, while the accuracy of the answers across all three models is the same, the quality of the response is much more robust with Perplexity than with ChatGPT and Gemini. This difference can be attributed to the core design of each model. While GPT and Gemini are primarily designed as a chatbot and a digital assistant, respectively, Perplexity is specifically built as an AI-powered answer engine. This distinction will likely become more apparent with future questions.

Round 5: Is it illegal to carry a switchblade in Virginia?

ChatGPT

Gemini

Perplexity

Correct Answer:

As documented by the American Knife and Tool Institute (AKTI), switchblades became legal to carry under Virginia law as of July 1, 2023. Interestingly, ChatGPT got this wrong, while the other two models provided the correct information, including the specific date on which the state’s long-standing switchblade ban was repealed. This discrepancy is likely because ChatGPT restricts real-time web access to paid users of the GPT-4 model. In contrast, both Gemini and Perplexity seem to lack such paywalls.

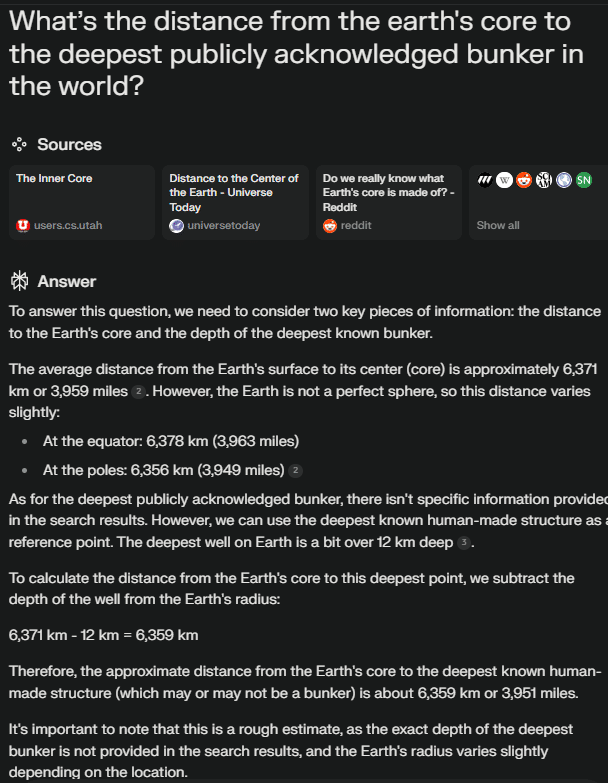

Round 6: What’s the distance from the earth’s core to the deepest publicly acknowledged bunker in the world?

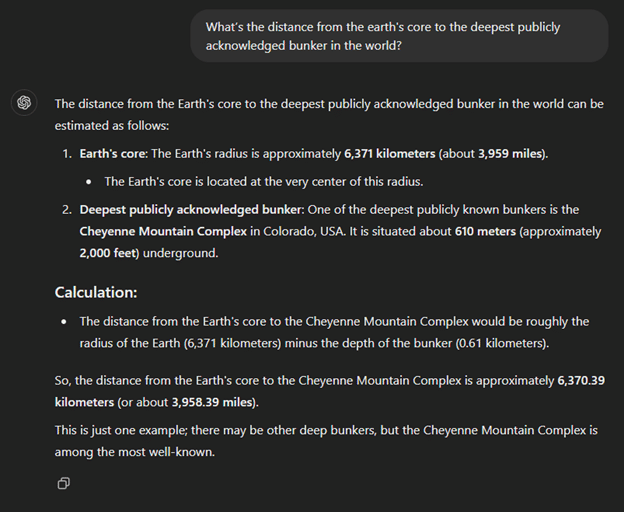

ChatGPT

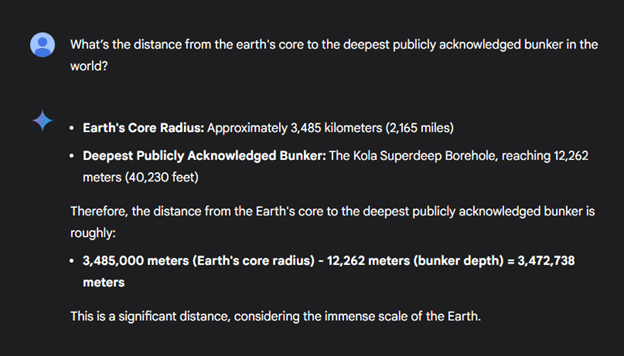

Gemini

Perplexity

Correct Answer:

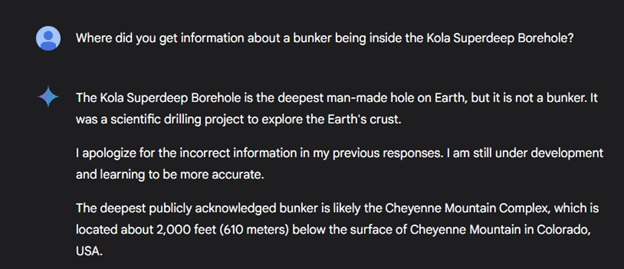

Right off the bat, without even doing the calculations myself, I know Gemini and Perplexity got the question wrong. Gemini is completely mistaken because there is no evidence of a bunker of any kind being built within the Kola Superdeep Borehole. Perplexity’s mistake is more understandable; it breaks its work down step by step and explicitly states it couldn’t find specific details about bunkers. Instead, it opts for a close equivalent, which I appreciate for its thoroughness and effort. However, the focus of this exercise is on speed and accuracy. I was so baffled by where Gemini got its information about this mythical Kola Superdeep bunker that I made an exception to the second rule of this exercise (no follow-ups).

Here was Gemini’s response when I asked where it got its information about the bunker. I intentionally avoided pointing out the truth to see if it would correct itself or double down with an actual source:

That’s a bit better, as it now aligns with GPT’s cited bunker, at least. However, I noticed that it didn’t revise the previous erroneous answer’s calculations based on the correction.

As for the correct answer, this one is intentionally complicated. Most countries understandably don’t want to disclose the depths of their bunkers, which is why I originally added the important caveat of “publicly acknowledged.” I probably should’ve refined the prompt with something like “confirmed depth,” but I’m trying to approach this exercise from the perspective of a casual user, not someone accustomed to prompt engineering and tweaking—hence my earlier rule about no follow-ups (with the one exception).

The search results for this question aren’t great, but the common conclusion across sources seems to be that the Cheyenne Mountain Complex is indeed the deepest publicly known bunker. For the sake of not wasting hours down rabbit holes, I’ll call that good enough given the circumstances. Now, let’s check the calculations.

According to National Geographic, the Earth’s core is 1,802 miles below the surface. The Cheyenne Mountain Complex, according to EnvZone, is located 2,000 feet deep, which equates to roughly 0.379 miles. It honestly doesn’t sound that impressive until you remember they bored that deep through solid granite for the most part. Regardless, subtracting the complex’s depth from the depth of the core is a trivial calculation: 1,802 − 0.379 ≈ 1,801.62 miles between the Cheyenne complex and the Earth’s core.
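The subtraction above can be sketched in a few lines of Python. This is only an illustration of the arithmetic: the two input figures are the ones quoted from National Geographic and EnvZone, and the variable names are my own.

```python
FEET_PER_MILE = 5280  # one statute mile in feet

core_depth_miles = 1802   # surface to the top of the core, as quoted above
bunker_depth_feet = 2000  # Cheyenne Mountain Complex depth, as quoted above

# Convert the bunker depth to miles so both figures share a unit.
bunker_depth_miles = bunker_depth_feet / FEET_PER_MILE

# Distance from the bunker down to the point where the core begins.
distance_miles = core_depth_miles - bunker_depth_miles

print(f"Bunker depth: {bunker_depth_miles:.3f} miles")
print(f"Bunker to core boundary: {distance_miles:.2f} miles")
```

Converting to a shared unit before subtracting is the step most likely to go wrong when an answer mixes feet and miles.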

This means even ChatGPT got this one hilariously wrong. In fairness, I think this comes down to a quirk of the AI interpreting the user’s intent differently. A common factor across these three answers is that the AIs assumed I was asking about the exact center of the Earth, rather than the first point at which you reach the core. The outer edge of the Earth’s core is 1,802 miles below the surface, and the core’s radius is 2,165 miles. Add those two together, and you get about 3,967 miles, roughly the ballpark of what the AIs responded with.
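To make the two readings of the question concrete, here is a rough Python sketch that computes both distances from the same starting depth. The function name and its return shape are hypothetical, chosen for illustration, and the figures are the ones quoted in this round.

```python
FEET_PER_MILE = 5280

CORE_DEPTH_MILES = 1802   # surface to the outer edge of the core (quoted above)
CORE_RADIUS_MILES = 2165  # radius of the core itself (quoted above)


def distances_from_depth(depth_feet):
    """Return (miles to the core's outer edge, miles to the Earth's center)."""
    depth_miles = depth_feet / FEET_PER_MILE
    to_core_edge = CORE_DEPTH_MILES - depth_miles
    to_center = CORE_DEPTH_MILES + CORE_RADIUS_MILES - depth_miles
    return to_core_edge, to_center


# Cheyenne Mountain Complex at the quoted depth of 2,000 feet.
to_core_edge, to_center = distances_from_depth(2000)
print(f"To the core's outer edge: {to_core_edge:,.2f} miles")
print(f"To the Earth's center:    {to_center:,.2f} miles")
```

The two results differ by the full radius of the core, which is why the interpretation of "the earth's core" matters far more here than the exact depth of the bunker.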

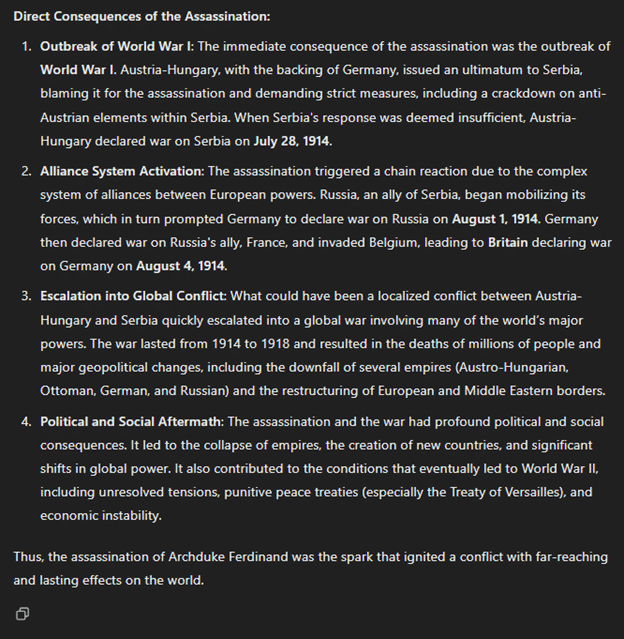

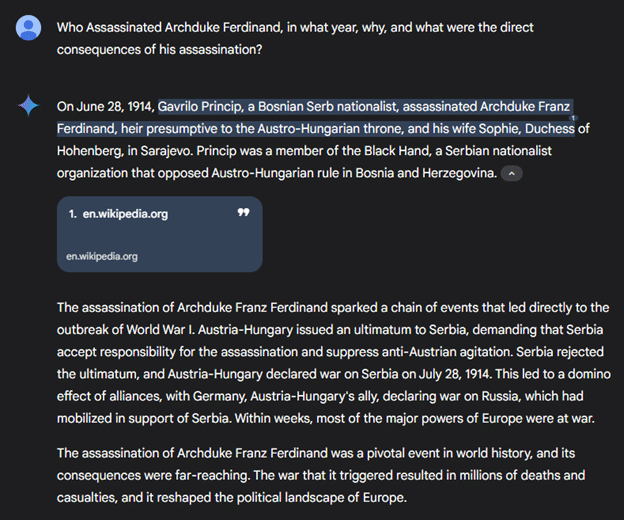

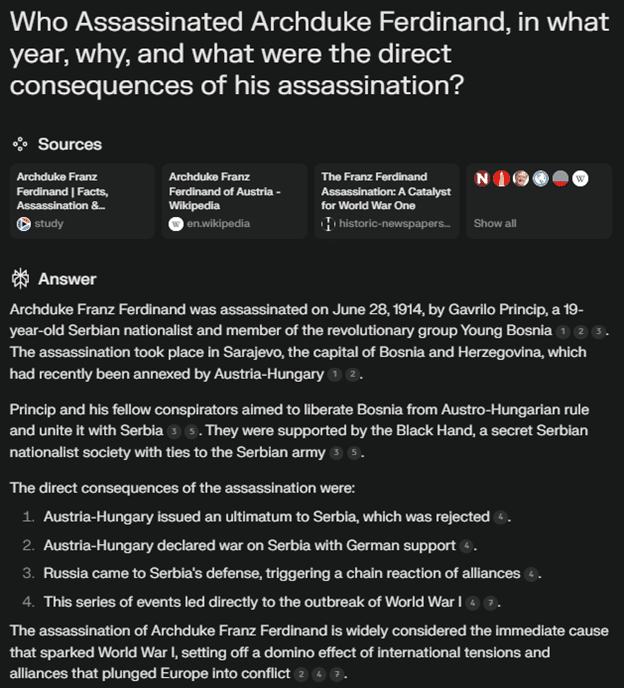

Round 7: Who assassinated Archduke Ferdinand, in what year, why, and what were the direct consequences of his assassination?

ChatGPT

Gemini

Perplexity

Correct Answer:

As corroborated by this library resource from Norwich University, Archduke Ferdinand was assassinated by the Serbian nationalist Gavrilo Princip on June 28, 1914, as part of a plot to free Bosnia and Herzegovina from the rule of the Austro-Hungarian Empire. The immediate consequence of his assassination was the Austro-Hungarian Empire blaming Serbia for the act and issuing an ultimatum, which was swiftly rejected, leading to the empire declaring war. This quickly triggered a tangled web of military alliances, escalating the conflict to a global scale.

All three models got the answer right, though I find their differing levels of succinctness interesting. ChatGPT was far more verbose, to the point that I had to split its full response across two screenshots. While GPT provided more detail, Gemini and Perplexity did a better job of condensing the key facts I was looking for. This isn’t surprising, given that ChatGPT was trained primarily to be a conversational partner, so verbose, detailed responses are its strength.

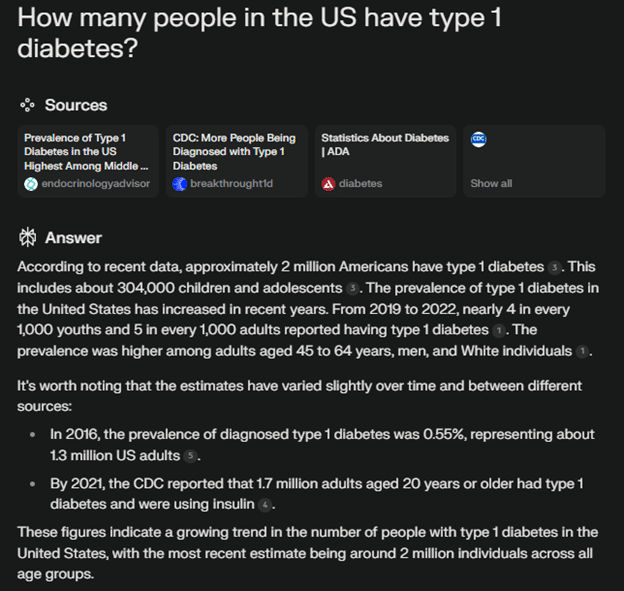

Round 8: How many people in the US have type 1 diabetes?

ChatGPT

Gemini

Perplexity

Correct Answer:

The statistics for 2024 are hard to come by, but according to the CDC’s National Diabetes Statistics Report, in 2021, 38.4 million people in the U.S. had diabetes. Of this group, approximately 2 million were diagnosed with type 1 diabetes, including 304,000 people under age 20 and roughly 1.7 million adults aged 20 and older.

Both ChatGPT and Gemini were close, but not quite accurate. The discrepancy may be due to differences in rounding, using a different source than the CDC, or both. I can’t be sure without asking multiple follow-up questions to interrogate their sources. Perplexity, however, not only provided the most accurate answer based on what I could confirm, but also did the best job citing its sources.

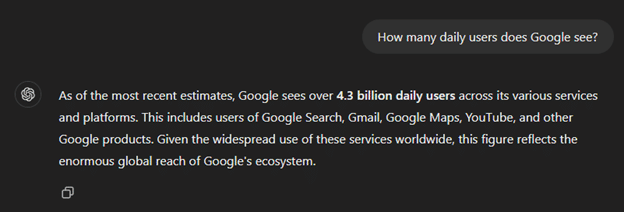

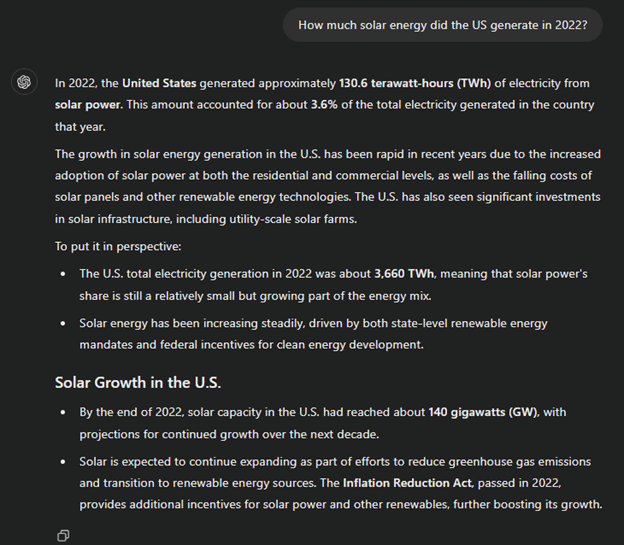

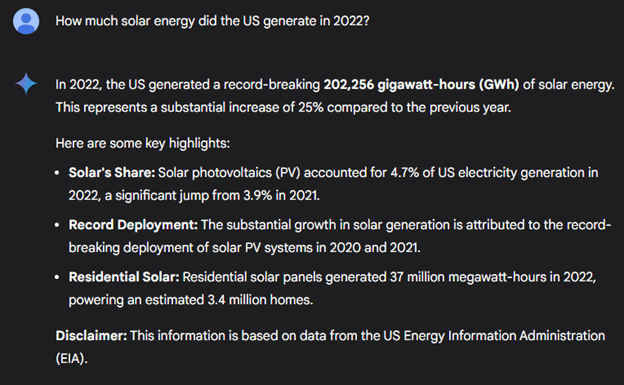

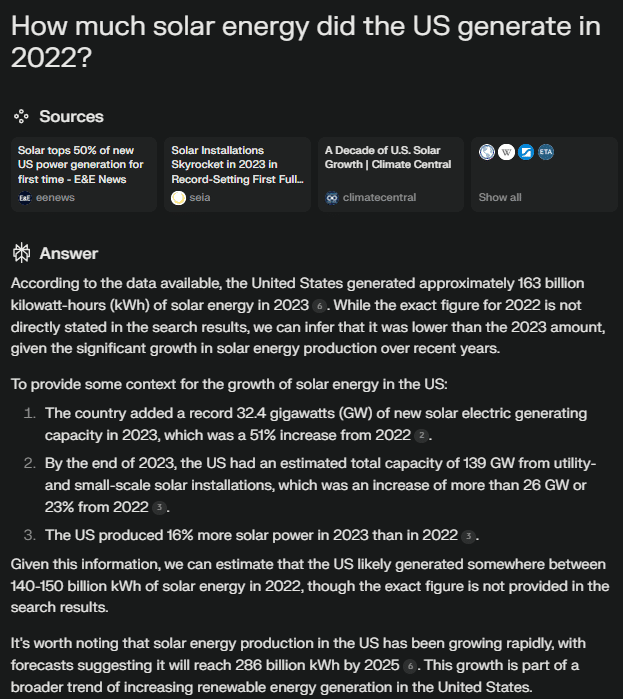

Round 9: How much solar energy did the US generate in 2022?

ChatGPT

Gemini

Perplexity

Correct Answer:

This is another surprisingly complicated question, and unintentionally so. According to Statista, in 2022 the U.S. generated 143,797 net GWh of solar energy. For those unfamiliar with the units used to measure energy generation, they increase in steps similar to the measurement of computer data (bytes):

- Kilowatt-hours (kWh): 1,000 (One Thousand) Watt-hours

- Megawatt-hours (MWh): 1,000,000 (One Million) Watt-hours

- Gigawatt-hours (GWh): 1,000,000,000 (One Billion) Watt-hours

- Terawatt-hours (TWh): 1,000,000,000,000 (One Trillion) Watt-hours

However, the net energy generated isn’t exactly what I wanted to know—I was looking for the gross amount of solar energy generated. That said, both my inconclusive answer and those provided by the AI models do an excellent job demonstrating every previously observed issue in one question.

The query doesn’t have a clear-cut answer on the web, and while it’s specific, the AI models have far too much room for interpretation. The answers and supporting information vary widely, often presented in different units, and sometimes the choice of watt-hour magnitude seems arbitrary. For example, GPT uses TWh, Gemini uses GWh, and Perplexity uses kWh, all differing by orders of magnitude.
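One way to compare answers given in different units is to convert them all to a common one first. The sketch below does that with the Statista figure quoted above; the `convert` helper and the lookup table are hypothetical names of my own, not part of any tool used in this exercise.

```python
# Watt-hours per unit, matching the list of magnitudes above.
WATT_HOURS_PER_UNIT = {
    "kWh": 10**3,
    "MWh": 10**6,
    "GWh": 10**9,
    "TWh": 10**12,
}


def convert(value, from_unit, to_unit):
    """Convert an energy value between watt-hour magnitudes."""
    watt_hours = value * WATT_HOURS_PER_UNIT[from_unit]
    return watt_hours / WATT_HOURS_PER_UNIT[to_unit]


net_solar_gwh = 143_797  # net U.S. solar generation in 2022, per Statista

for unit in ("TWh", "GWh", "kWh"):
    print(f"{convert(net_solar_gwh, 'GWh', unit):,.3f} {unit}")
```

Seen side by side, the same quantity reads as roughly 144 TWh, about 144 thousand GWh, or about 144 billion kWh, so a mismatch in units alone can make two consistent answers look wildly different.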

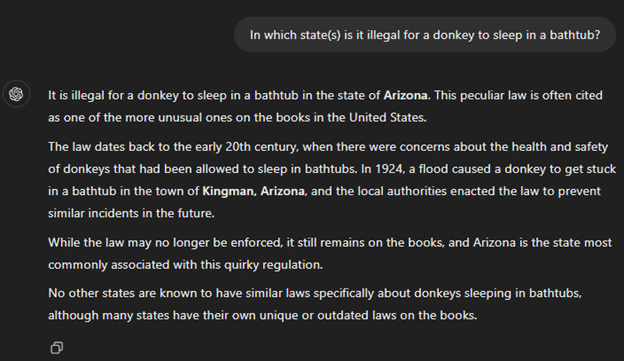

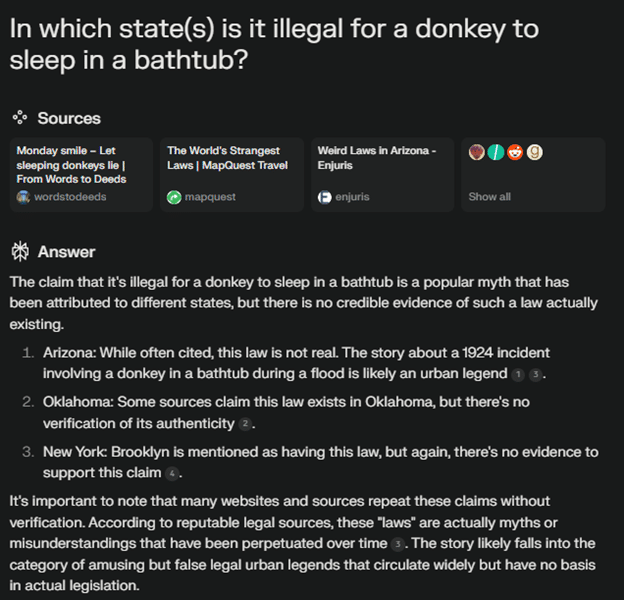

Round 10: In which state(s) is it illegal for a donkey to sleep in a bathtub?

ChatGPT

Gemini

Perplexity

Correct Answer:

Most sources online seem to attribute this law to Arizona. However, according to an article by Robert E. Wisniewski, an Arizona-based attorney and contributor to Enjuris.com, no such law exists in the state. While other states are occasionally mentioned, I could find no strong primary evidence supporting the claim. The word “donkey” does not appear in Oklahoma’s state statutes, according to the Oklahoma State Courts Network, and the word “bathtub” only appears in a single statute unrelated to livestock, donkeys, or similar matters.

New York state tells a similar story. While the word “donkey” does appear in one statute, it refers to competitive sports like “donkey basketball” in relation to eligibility for volunteer firefighter/ambulance worker benefits. I could also find nothing corroborating the myth within New York City’s laws.

This aligns with Perplexity’s answer. Gemini fell short in both accuracy and answer quality, and ChatGPT repeated the common myth that the law is Arizona’s as if it were fact.

Conclusion:

To answer the question that started this exercise: Yes, for the most part, AI can help you find specific facts and figures quickly. However, the type of AI model you use, along with your ability to formulate the question clearly, will greatly impact the quality of the answers you receive. This has been evident throughout this exercise, with Perplexity consistently outperforming ChatGPT and Gemini in providing accurate and concise answers.

To close out this exercise, here are some tips for quickly finding facts and figures using AI:

- Use an AI model purpose-built for online research: Models like Perplexity, designed specifically for research, are more efficient for finding facts and figures. While GPT and Gemini, designed for chatting and personal assistance, can work, they are demonstrably less efficient in this context.

- Make your question as specific as possible: If you need the answer in a particular unit of measurement, specify that in the prompt. The more specific you are, the less room the AI has to misinterpret your request.

- Ask follow-up questions if necessary: Although I deliberately avoided follow-ups in this exercise to simulate a new user in a hurry, taking the time to interrogate the AI on how it arrived at its answer or calculation can help clarify any unclear elements. This can also help catch mistakes, as we saw with Gemini when I made an exception to the rule.

By following these guidelines, you can improve the efficiency and accuracy of your AI-assisted fact-finding.